Overview

An elusion test is a statistical validation method used in eDiscovery to assess a predictive coding model’s ability to distinguish relevant from non-relevant documents. In principle, an elusion test takes a sample of documents coded “non-relevant” by a model and sets them aside for manual, human review. The percentage of the model’s “non-relevant” documents manually coded by humans as “relevant” estimates the prevalence of relevant documents missed (eluded) by the model during assessment. This percentage, called the elusion rate, helps judge the accuracy of a model’s assessment capabilities.

Classifiers with a high elusion rate (lower accuracy) should be trained further until the rate falls to an acceptable level. That level is usually defined by the user or agreed upon by multiple parties.

Perform an Elusion Test

I. Create a New Tag for Your Elusion Test

See Supervised Learning Overview for instructions on how to create a new AI tag used to train a classifier.

II. Confirm Tag and Score Syncing

Navigate to Supervised Learning > Tagging & Saving

Confirm steps 1 through 3 (Tag Sync, Classification, and Score Display) are listed “Ready,” with a green checkmark.


III. Create a Saved Search for Documents Below the Score Threshold

Under Filters in the Sidebar, expand Document Status and select Has Tags.

Open the search builder to create an Advanced Search.

Toggle the Tag Paths: Has Any Value pill to NOT.

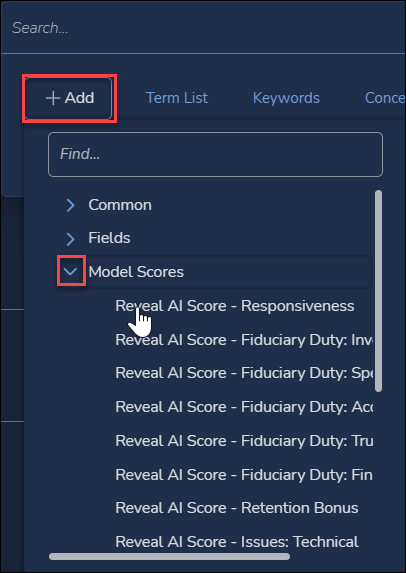

Click Add.

Expand Model Scores.

Select your Responsiveness AI Tag.

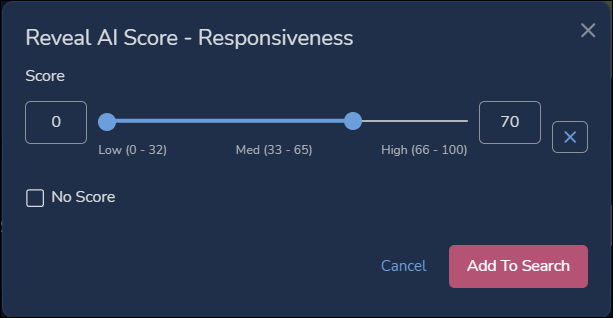

Set the range of scores between zero and your Score Threshold (here, 70).

Usually, Case Owners decide the score threshold. Documents below a score threshold are considered Not Relevant / Not Responsive while documents at or above a score threshold are considered Relevant / Responsive. Including your score threshold as part of your elusion test yields more conservative results.

Click Add To Search.

Run the Search.

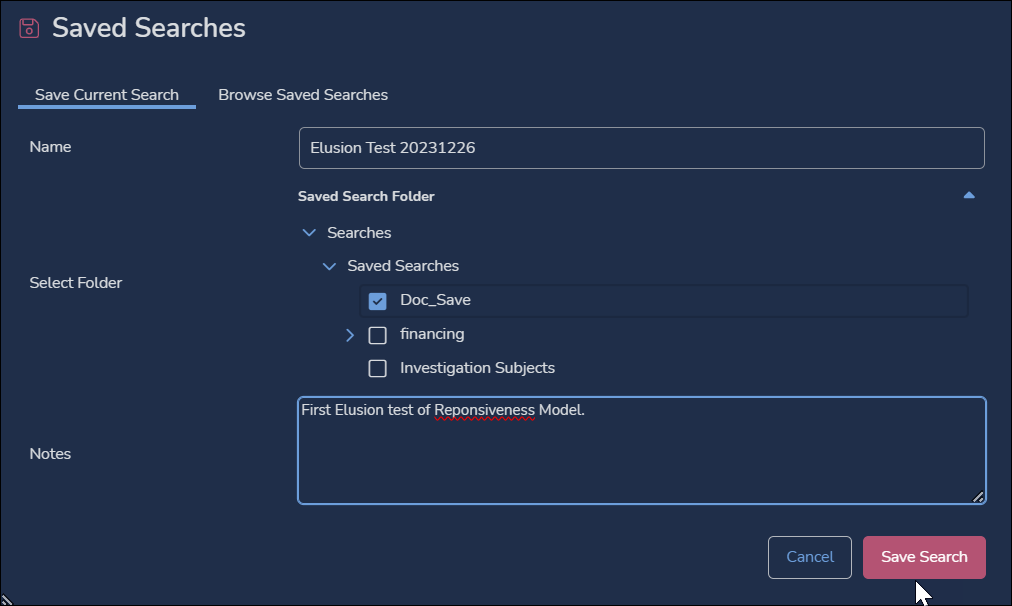

Save the Search as “Elusion Test” with the current date.
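The selection logic behind this saved search can be sketched in a few lines of Python. This is only an illustration of the filter, not how the platform implements it: the `Document` class, the `elusion_candidates` function, and the 0 to 100 score scale are assumptions made for the example.

```python
from dataclasses import dataclass, field


@dataclass
class Document:
    """Hypothetical stand-in for a document record."""
    doc_id: str
    score: float                      # model score, assumed to run from 0 to 100
    tags: list = field(default_factory=list)


def elusion_candidates(documents, threshold, include_threshold=True):
    """Return untagged documents scored between zero and the threshold."""
    selected = []
    for doc in documents:
        if doc.tags:
            continue  # mirrors the NOT "Has Any Value" pill: skip tagged documents
        if include_threshold:
            in_range = 0 <= doc.score <= threshold
        else:
            in_range = 0 <= doc.score < threshold
        if in_range:
            selected.append(doc)
    return selected


# Illustrative data only
docs = [
    Document("A", 12.0),
    Document("B", 70.0),
    Document("C", 85.0),
    Document("D", 40.0, tags=["Responsive"]),
]
print([d.doc_id for d in elusion_candidates(docs, threshold=70)])
```

With `include_threshold=True`, a document scored exactly at the threshold is included in the test population, which corresponds to the more conservative option described above.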

IV. Sample Your Documents

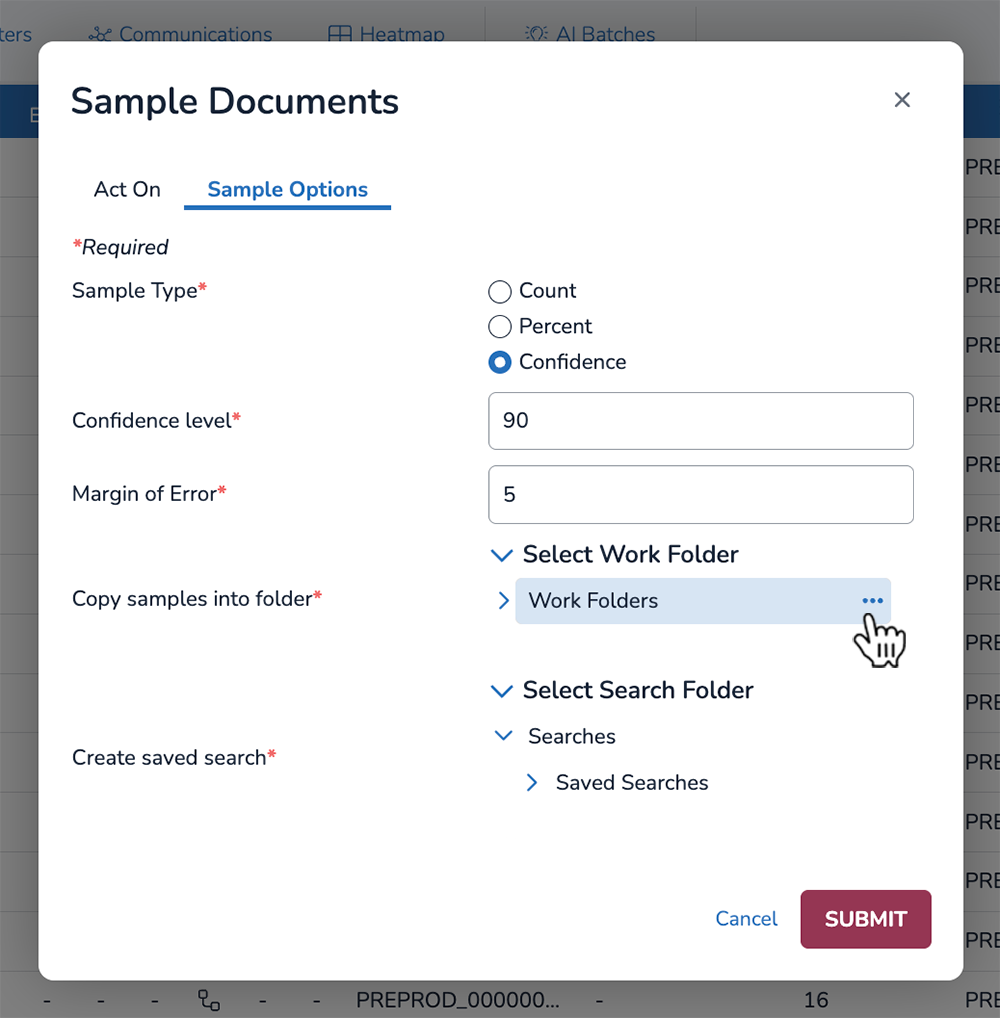

From the Grid bulk action menu, create a Sample using values for Confidence and Margin of Error as appropriate and consistent with your review.

In the Sample Documents modal…

Choose Confidence as your Sample Type.

Choose a Confidence level (90% recommended) and Margin of Error (5% recommended). You can type decimal values into either of these fields.

Select work folder to copy samples, and Select Search Folder to create saved search. For both fields, you can either choose an existing folder or click the ellipsis [ … ] icon to create a new folder.

Click SUBMIT.
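To get a feel for how Confidence and Margin of Error translate into a sample size, the sketch below uses the standard normal-approximation formula with a finite population correction. The platform's own calculation is not documented here and may differ. The function name `sample_size` and the worst-case `proportion=0.5` are assumptions made for the example.

```python
import math
from statistics import NormalDist


def sample_size(population, confidence=0.90, margin_of_error=0.05, proportion=0.5):
    """Estimate how many documents to sample from `population`.

    Uses n0 = z^2 * p * (1 - p) / e^2, then applies a finite population
    correction. `proportion=0.5` is the most conservative assumption.
    """
    if population <= 0:
        raise ValueError("population must be positive")
    # Two-sided z-score for the chosen confidence level
    z = NormalDist().inv_cdf(1 - (1 - confidence) / 2)
    n0 = (z ** 2) * proportion * (1 - proportion) / margin_of_error ** 2
    n = n0 / (1 + (n0 - 1) / population)
    return min(population, math.ceil(n))


# Hypothetical below-threshold population of 10,000 documents
print(sample_size(10_000, confidence=0.90, margin_of_error=0.05))
print(sample_size(10_000, confidence=0.95, margin_of_error=0.02))
```

Raising the confidence level or tightening the margin of error both increase the number of documents a reviewer has to code, so these values are a trade-off between certainty and review effort.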

V. Review Documents and Calculate Scores

Have a subject matter expert review the sampled documents and code each one as either “Responsive” or “Not Responsive.”

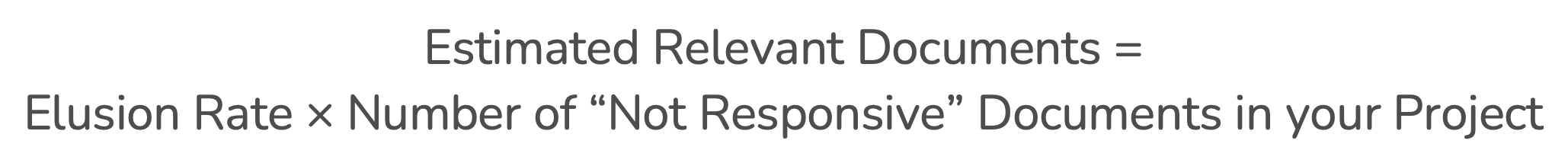

Calculate the elusion rate (percentage) using the below formula:

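Elusion Rate (%) = (Number of sampled documents coded “Responsive” ÷ Total number of sampled documents reviewed) × 100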

Decide whether your rate is acceptable. Continued training generally improves a classifier’s accuracy and lowers its elusion rate.

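Use the elusion rate to estimate how many documents the classifier scored as non-relevant may actually be relevant: multiply the elusion rate by the total number of documents in your below-threshold set (the “Elusion Test” saved search).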
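The arithmetic for this step can be sketched as follows. The function names and all counts are hypothetical, chosen only to show the calculation: the share of sampled documents a reviewer coded “Responsive,” projected onto the full below-threshold population.

```python
def elusion_rate(responsive_in_sample, sample_size):
    """Fraction of sampled below-threshold documents coded Responsive by reviewers."""
    if sample_size <= 0:
        raise ValueError("sample_size must be positive")
    if not 0 <= responsive_in_sample <= sample_size:
        raise ValueError("responsive_in_sample must be between 0 and sample_size")
    return responsive_in_sample / sample_size


def estimated_missed(rate, below_threshold_total):
    """Project the elusion rate onto every document below the score threshold."""
    return round(rate * below_threshold_total)


# Hypothetical figures: 8 of 264 sampled documents were coded Responsive,
# and 10,000 documents sit below the score threshold.
rate = elusion_rate(8, 264)
print(f"Elusion rate: {rate:.2%}")
print(f"Estimated relevant documents missed: {estimated_missed(rate, 10_000)}")
```

The projected count is an estimate and is subject to the margin of error chosen when the sample was drawn.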

Final Considerations

Case Owners and/or Project Managers may be responsible for choosing appropriate Score Thresholds, Confidence Levels, and Margins of Error, and for determining an acceptable elusion rate for your classifiers. These values can vary depending on risk assessment and project characteristics.

If further training is required, the tags applied during your elusion test can be transferred to the actual classifier tag using the following workflow:

Transfer review tag used for elusion test to the actual review tag.

After tags are synced, kick off “Run Full Process” to force the classifier to rescore documents.

Re-run elusion testing steps above.

Re-assess whether new elusion rate is acceptable.

Other validation methods, like creating control sets or assessing for precision and recall, are also recommended for validating a classifier’s accuracy.
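As a rough illustration of the precision and recall measures mentioned above, the sketch below compares human coding with model predictions on a small control set. The function name `precision_recall` and the sample labels are hypothetical, and `True` stands for Responsive.

```python
def precision_recall(human_labels, model_labels):
    """Compute precision and recall, treating True as Responsive."""
    pairs = list(zip(human_labels, model_labels))
    true_pos = sum(1 for human, model in pairs if human and model)
    false_pos = sum(1 for human, model in pairs if not human and model)
    false_neg = sum(1 for human, model in pairs if human and not model)
    # Guard against division by zero when a category is empty
    precision = true_pos / (true_pos + false_pos) if (true_pos + false_pos) else 0.0
    recall = true_pos / (true_pos + false_neg) if (true_pos + false_neg) else 0.0
    return precision, recall


# Illustrative control set of six documents
human = [True, True, True, False, False, True]
model = [True, False, True, True, False, True]
precision, recall = precision_recall(human, model)
print(f"Precision: {precision:.0%}, Recall: {recall:.0%}")
```

Precision describes how many of the documents the model called Responsive really are, while recall describes how many of the truly Responsive documents the model found. A low recall and a high elusion rate both point to relevant documents being missed.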