- 19 Nov 2024

- 6 Minutes to read

Creating AI Assignments

Introduction

AI-Driven Batches in Reveal allow you to create batches of documents that can be reviewed to train a Classifier. Using AI techniques, Reveal intelligently selects documents for training, providing users with a simple way to create personalized AI assignments.

However, some Project Administrators may prefer to create trackable pools of custom assignment batches. This allows for greater control and flexibility when training a Classifier using a specific subset of documents. Three methods are available for users or Administrators to accomplish this, and each is described in detail below.

Prerequisites and Process Flow

The project needs a Classifier that has completed at least one training round.

Project Administrators or reviewers can check out their own batches and review documents that will be used to train the Classifier and update the scores.

Project Administrators can decide to configure and check out a larger AI batch themselves and re-assign the documents to a pool.

Similarly, Project Managers can also run advanced searches and then manually assign the documents to a pool/team/user without relying on the pre-defined AI batch compositions.

Method 1: Each User Checks Out Their Own AI-Driven Batches

This is the recommended method for most review projects. The Project Administrator defines the batch settings, and each user checks out their own batch individually. Progress can be tracked from the Assignments window.

On the Supervised Learning tab, select the Classifier to be trained.

Click on the Configure icon on the top right corner of the screen.

Under the Batch Configuration section, click on Choose Settings.

Estimate how many documents reviewers can tag within one tag sync interval.

Note

Estimate how many documents a reviewer will process during one tag sync interval (which defaults to one hour) and set that as the batch size. This value can be adjusted later in the project to improve accuracy. The tag sync interval determines how frequently tags are synchronized back to the Classifier.

In the Batch Configuration pop-up window, set the Batch Size to the desired number. This value will reflect the actual batch sizes to be checked out and can be readjusted whenever desired.

Set the Retraining Interval to the number of documents the whole group of reviewers can tag within one tag sync interval. For example, if a team of four reviewers can review 200 documents per sync interval, set the Retraining Interval to 200.

Set the Queue Training Size to be larger than the Retraining Interval. The recommended value is 3 to 4 times the Retraining Interval set above.

Click OK to save the settings.

Note

These estimates are important to ensure that training rounds occur at a frequency that allows reviewers to check out batches with up-to-date scores.

Adjust the Batch Composition, which determines how Reveal automatically selects documents for an AI-Driven Batch. Two options are available: Active Learning with Diverse Documents, which selects a small sample that represents the overall document population based on content diversity, and Active Learning with High Scoring Documents, which prioritizes documents with higher relevance scores in review batches.

Click on the Save button at the bottom of the screen to save the settings.

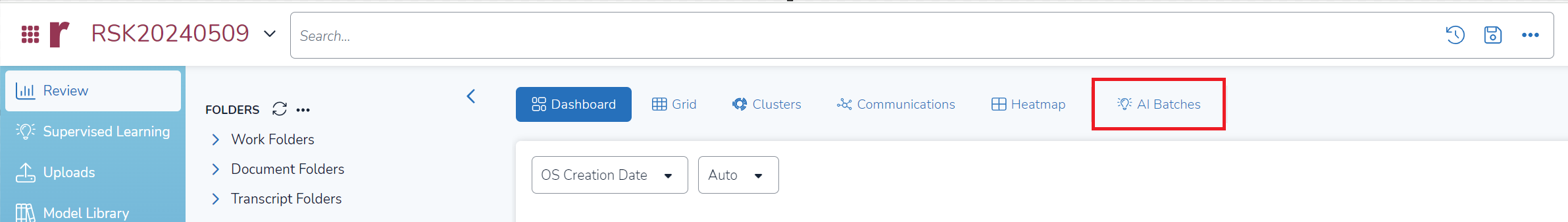

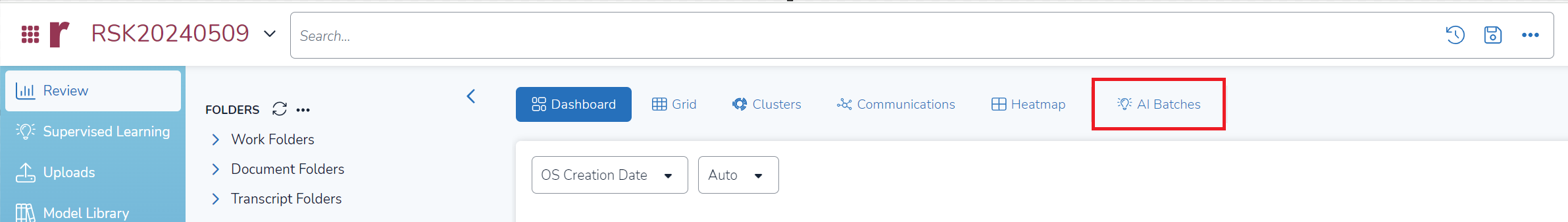

From the Review tab, click AI-Driven Batches.

Select the Classifier to be trained.

Select the Tag Profile that includes the AI tags that reviewers or subject matter experts should use when coding documents for training.

The Queue will display the batching and assignment status once a selection is made.

The AI-Driven Queue displays Available when the system is ready for you to create an AI-Driven Batch for the selected Classifier. To create a new AI-Driven Batch, click Select.

After you click Select (in this example, for a new AI-Driven Batch), a message will appear in the Queue area confirming that the batch creation was successful. Once the message is displayed, you can close the window.

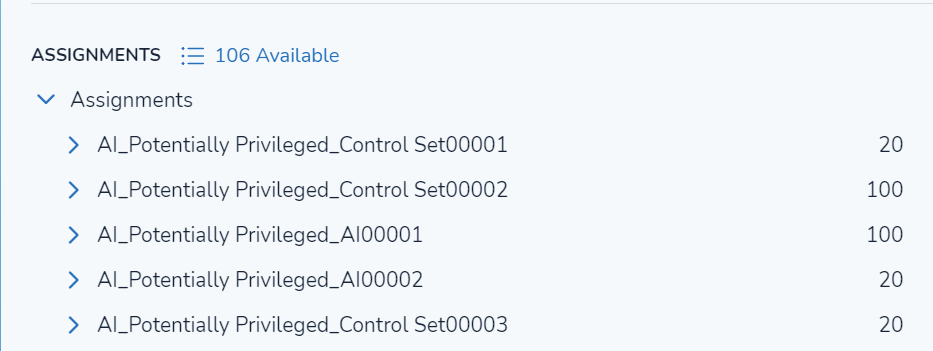

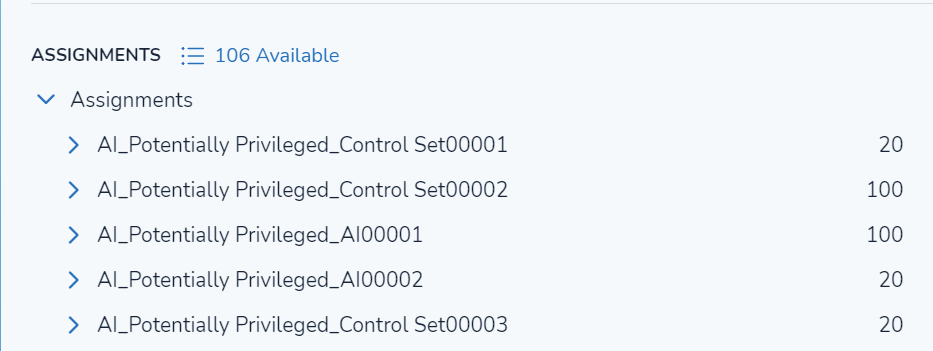

The newly created batch will be shown under the user’s own Assignments session.
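The sizing guidance in Method 1 comes down to simple arithmetic, which can be sketched as follows. This is only an illustration under stated assumptions: the function name `suggest_method1_settings` and its parameters are hypothetical helpers for planning, not part of Reveal, and the values still have to be entered by hand in the Batch Configuration pop-up window.

```python
def suggest_method1_settings(reviewers, docs_per_reviewer, queue_multiplier=3):
    """Suggest Batch Configuration values for Method 1 (hypothetical helper).

    reviewers: number of reviewers on the team.
    docs_per_reviewer: documents one reviewer is expected to tag
        during one tag sync interval.
    queue_multiplier: 3 to 4, following the recommendation that the
        Queue Training Size be 3-4 times the Retraining Interval.
    """
    if reviewers < 1 or docs_per_reviewer < 1:
        raise ValueError("reviewers and docs_per_reviewer must be positive")
    if not 3 <= queue_multiplier <= 4:
        raise ValueError("queue_multiplier should be between 3 and 4")

    # The whole team's output per tag sync interval drives retraining.
    retraining_interval = reviewers * docs_per_reviewer
    return {
        "batch_size": docs_per_reviewer,
        "retraining_interval": retraining_interval,
        "queue_training_size": queue_multiplier * retraining_interval,
    }


settings = suggest_method1_settings(reviewers=4, docs_per_reviewer=50)
print(settings)
```

With four reviewers tagging about 50 documents each per interval, the sketch suggests a Batch Size of 50, a Retraining Interval of 200, and a Queue Training Size of 600. These are starting points that can be readjusted as the project progresses.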

Method 2: Administrator Checks Out AI Batch and Assigns Documents Manually

This method is recommended for large review cases with many reviewers or for Administrators who want to have more control over assignment batch distribution. It will involve manual steps like assigning documents to a pool of batches and running training rounds manually before a pool of batches is created.

On the Supervised Learning tab, select the Classifier to be trained.

Click on the Configure icon on the top right corner of the screen.

Under the Batch Configuration section, click on Choose Settings.

In the Batch Configuration pop-up window, set the Batch Size to a number that reflects how many documents the group of reviewers can review during a tag sync interval of one hour.

Set the Queue Training Size to at least two times the value set for the Batch Size to ensure that enough documents will be available.

Set the Retraining Interval to a value that is higher than both the Batch Size and the Queue Training Size (for instance, 4,000 or more).

Note

The idea of this method is that the training round will be run manually by the Administrator before the creation of any new assignments, hence the high retraining interval value that will prevent the machine from doing it automatically.

Click OK to save the settings.

Adjust the Batch Composition, which determines how Reveal automatically selects documents for your AI-Driven Batch. Two options are available: Active Learning with Diverse Documents, which selects a small sample that represents the overall document population based on content diversity, and Active Learning with High Scoring Documents, which prioritizes documents with higher relevance scores in review batches.

Click on the Save button at the bottom of the screen to save the settings.

Back on the Classifier screen, click on Run Full Process to initiate a training round.

Note

The Administrator must wait for the current training round to finish before proceeding to the next step. Progress can be monitored on the Supervised Learning tab, where the Classifier shows the word Ready once the round is complete.

The Administrator proceeds to check out an AI batch (as described in Method 1, beginning at Step 11: from the Review tab, click AI-Driven Batches).

The Administrator selects the folder containing all the assigned documents, which will be displayed under the Administrator's own assignment folders.

The Administrator manually assigns the documents to a pool/team/user.

Reviewers check out their batches from the newly created assignments.

After the available assignments are reviewed, the Administrator runs a new training round and repeats the cycle (back to Step 10, Run Full Process).

Note

The above step is important to guarantee the document queue is replenished and the scores are updated before the whole cycle is repeated, and new documents can be reviewed.
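The relationships between the three Method 2 settings can be checked before they are entered. The sketch below is a hypothetical planning aid, not a Reveal feature: the function name `check_method2_settings` is an assumption, and the rules it encodes are only the ones stated in the steps above.

```python
def check_method2_settings(batch_size, queue_training_size, retraining_interval):
    """Return a list of problems with proposed Method 2 settings (hypothetical helper)."""
    problems = []

    # The queue should hold at least two batches' worth of documents.
    if queue_training_size < 2 * batch_size:
        problems.append(
            "Queue Training Size should be at least twice the Batch Size"
        )

    # A high Retraining Interval keeps training from starting automatically,
    # so the Administrator can run each round manually.
    if retraining_interval <= max(batch_size, queue_training_size):
        problems.append(
            "Retraining Interval should exceed both Batch Size and Queue Training Size"
        )

    return problems


print(check_method2_settings(1000, 2000, 4000))  # consistent settings
print(check_method2_settings(1000, 1500, 1200))  # both rules violated
```

An empty list means the proposed values follow the guidance for this method. Any messages returned point to the setting that should be raised.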

Method 3: Administrator Runs Custom Searches and Assigns Documents

Unlike previous methods, the Administrator will not rely on the predefined AI batch composition options. Instead, they will run advanced searches based on custom criteria (keywords, concept searches, AI scores, etc.) to tailor the batch composition to their specific objectives.

This method is recommended for advanced users who want total control of the documents being reviewed. It involves the manual creation and distribution of assignment batches based on criteria defined by the Project Administrator. Because the user controls which documents and scores enter training, this method can drastically influence the way models are trained.

On the Supervised Learning tab, select the desired Classifier to train.

Click on the Configure icon on the top right corner of the screen.

Under the Batch Configuration section, click on Choose Settings.

Set the Retraining Interval to a value that reflects how often the Classifier should be trained.

Note

The value entered here is subjective and can mirror the number of documents assigned to reviewers after searches are run. It can be as low as 40 documents or as high as 2,000 documents. The Batch Size and Queue Training Size are irrelevant for this method because the Administrator will not use the standard AI batching workflow described in the previous methods.

Click OK to close the pop-up window, then click Save to save the settings.

At the Classifier screen, click on Run Full Process to initiate a training round.

The Administrator runs a tailored advanced search leveraging Reveal’s powerful search engine.

Note

The above step is only necessary when updated scores are required and can be skipped if searches on scores stored in elastic are not being used.

The Administrator manually assigns the search results to a pool/team/user.

Alternatively, the Administrator can set an auto-assign job that runs periodically and creates the assignments.

Reviewers check out their batches from the newly created assignments.

After the review is complete, the Administrator can repeat the cycle from Step 7 (running the advanced search).

Note

In this method, the training round frequency is dictated by the Retraining Interval value set by the Administrator in Step 4.
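One way to pick a Retraining Interval for Method 3 is to start from the number of documents assigned per search cycle and keep it within the range mentioned in the note above. The sketch below assumes that approach. The function name `suggest_retraining_interval` is hypothetical, and the bounds of 40 and 2,000 are the illustrative values from this article, not confirmed product limits.

```python
def suggest_retraining_interval(docs_assigned_per_cycle, low=40, high=2000):
    """Suggest a Retraining Interval for Method 3 (hypothetical helper).

    docs_assigned_per_cycle: documents assigned to reviewers after each
        round of custom searches.
    low, high: illustrative bounds taken from the note above (assumption:
        they are guidance, not limits enforced by the product).
    """
    if docs_assigned_per_cycle < 1:
        raise ValueError("docs_assigned_per_cycle must be positive")
    # Clamp the assignment size into the illustrative range.
    return max(low, min(high, docs_assigned_per_cycle))


for assigned in (10, 500, 5000):
    print(assigned, "->", suggest_retraining_interval(assigned))
```

Mirroring the assignment size means a training round is triggered roughly once per completed cycle of assigned documents. A smaller value retrains more often, and a larger one retrains less often.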
