Classifier training can begin once the classifier is created and its batching and other options are configured. As reviewers apply AI tags, the classifier learns to select documents intelligently and to classify unreviewed documents. Its predictions become dependable once the training results stabilize over several rounds and yield predictive scores comparable to the coding metrics of a subject matter expert.

A new project classifier learns over several batches, or “rounds,” how to interpret reviewer tagging to predict document scoring. This process can be accelerated by adding an existing AI Model to the Classifier, as discussed in How to Build & Configure a Classifier; briefly, doing so applies existing subject matter expert intellectual property from a similar context to begin assessing the current project. This can expedite the intelligent selection of documents using AI-Driven Batches, either to examine the most likely responsive documents as quickly as possible or to focus on borderline positive/negative documents to better train the classifier and solidify the predictions of the AI Model it creates.
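The two batch selection goals described above can be illustrated with a minimal sketch. This is not Reveal's implementation: the `select_batch` function, the `"top"` and `"borderline"` strategy names, and the assumption that predictive scores fall between 0 and 1 with 0.5 as the midpoint are all hypothetical, chosen only to show the difference between the two approaches.

```python
def select_batch(scores: dict[str, float], batch_size: int, strategy: str = "top") -> list[str]:
    """Pick document IDs for the next review batch (illustrative only).

    scores   -- hypothetical predictive scores in the range 0..1, keyed by document ID
    strategy -- "top" selects the most likely responsive documents;
                "borderline" selects documents closest to the assumed 0.5 midpoint
    """
    if strategy == "top":
        # Highest scores first: review likely responsive documents as early as possible.
        def sort_key(doc_id: str) -> float:
            return -scores[doc_id]
    elif strategy == "borderline":
        # Smallest distance from the midpoint first: the documents the model is least sure about.
        def sort_key(doc_id: str) -> float:
            return abs(scores[doc_id] - 0.5)
    else:
        raise ValueError(f"unknown strategy: {strategy!r}")
    return sorted(scores, key=sort_key)[:batch_size]


example_scores = {"A": 0.95, "B": 0.52, "C": 0.10, "D": 0.47, "E": 0.80}
print(select_batch(example_scores, 2, "top"))         # ['A', 'E']
print(select_batch(example_scores, 2, "borderline"))  # ['B', 'D']
```

In this sketch, the "top" strategy favors speed of finding responsive documents, while the "borderline" strategy favors the documents whose coding is likely to teach the classifier the most.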

Code Documents

As you begin coding documents within your AI-Driven Batches, Reveal will automatically run a training round for your Classifier(s) after a minimum number of documents have been coded. This minimum number of documents is referred to as the Retraining Interval.

Note

The default setting for the Retraining Interval is 10.

To adjust the Retraining Interval, navigate to the Supervised Learning tab, click the gear icon in the upper right corner of your Classifier, and scroll down to the Batch Configuration section. The same section also contains the Minimum Positive Examples setting, which sets the minimum number of Positive examples that must be coded before the training process kicks off.
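As a rough illustration of how the two Batch Configuration settings might interact, the sketch below tracks coding decisions and reports when a training round would be due. It is an assumption-laden model, not Reveal's actual logic: the `RetrainingTracker` class, its field names, the default of 1 for minimum positive examples, and the rule that both conditions must hold at once are hypothetical. Only the default Retraining Interval of 10 comes from the text above.

```python
from dataclasses import dataclass


@dataclass
class RetrainingTracker:
    """Hypothetical model of the Retraining Interval and Minimum Positive Examples settings."""

    retraining_interval: int = 10        # documented default
    minimum_positive_examples: int = 1   # assumed value, for illustration only
    coded_since_last_round: int = 0
    total_positive: int = 0
    rounds_run: int = 0

    def record_coding(self, is_positive: bool) -> bool:
        """Record one coded document; return True if a training round would start."""
        self.coded_since_last_round += 1
        if is_positive:
            self.total_positive += 1

        # Assumption: a round starts only when enough documents have been coded
        # since the last round AND enough Positive examples exist overall.
        ready = (
            self.coded_since_last_round >= self.retraining_interval
            and self.total_positive >= self.minimum_positive_examples
        )
        if ready:
            self.rounds_run += 1
            self.coded_since_last_round = 0
        return ready


tracker = RetrainingTracker(retraining_interval=10, minimum_positive_examples=2)
# Ten Non-Responsive codings reach the interval but supply no Positive examples.
results = [tracker.record_coding(is_positive=False) for _ in range(10)]
# Two Responsive codings then satisfy the minimum, so the second one triggers a round.
results += [tracker.record_coding(is_positive=True) for _ in range(2)]
print(results.index(True) + 1)  # 12
```

Under these assumptions, coding many documents without any Positive examples would delay the first training round, which is one reason to check the Minimum Positive Examples value when training does not appear to start.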

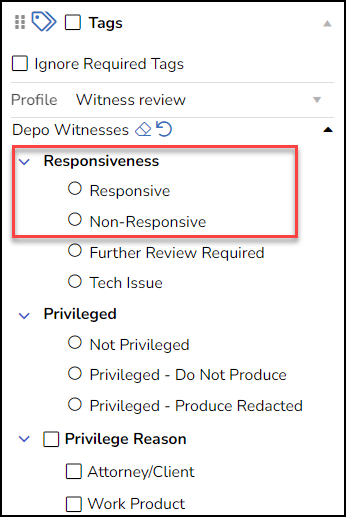

Here is an example of a standard Document Review screen tag pane in Reveal. In this screenshot you can see both the Responsive and Non-Responsive choices for the Responsiveness AI Tag; the other two choices exist only to inform the project manager of any issues encountered during document review.

The reviewer's choice of Responsive or Non-Responsive will be analyzed with the document's content to refine the AI Model's predictive scoring of further documents.

After one or more batches or rounds are completed, the results of this training can be evaluated. The reports discussed in How to Evaluate a Classifier can be used to assess the progress of training and to provide metrics that validate the classifier’s predictive scores.
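To make the idea of validating predictive scores against reviewer coding concrete, here is a minimal sketch that computes precision and recall for a set of coded documents. It does not reproduce Reveal's reports, which may use different metrics. The `precision_recall` function, the 0 to 1 score range, and the 0.5 cutoff are assumptions made for illustration.

```python
def precision_recall(
    coded: dict[str, bool],
    scores: dict[str, float],
    threshold: float = 0.5,
) -> tuple[float, float]:
    """Compare reviewer coding with thresholded scores (illustrative only).

    coded     -- reviewer decisions keyed by document ID (True = Responsive)
    scores    -- hypothetical predictive scores in the range 0..1
    threshold -- assumed cutoff at or above which a document is predicted Responsive
    """
    true_pos = false_pos = false_neg = 0
    for doc_id, is_responsive in coded.items():
        predicted_responsive = scores[doc_id] >= threshold
        if predicted_responsive and is_responsive:
            true_pos += 1
        elif predicted_responsive:
            false_pos += 1
        elif is_responsive:
            false_neg += 1

    # Guard against division by zero when nothing is predicted or nothing is Responsive.
    precision = true_pos / (true_pos + false_pos) if (true_pos + false_pos) else 0.0
    recall = true_pos / (true_pos + false_neg) if (true_pos + false_neg) else 0.0
    return precision, recall


coded = {"A": True, "B": True, "C": False, "D": False}
scores = {"A": 0.9, "B": 0.4, "C": 0.7, "D": 0.1}
print(precision_recall(coded, scores))  # (0.5, 0.5)
```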