Download a Brainspace Datasets Report

Follow these steps to generate a dataset management report: a usage report for all listed datasets, covering either the current period or a specified period. The report includes Peak Usage for each dataset created or modified under Brainspace 6.7 or later.

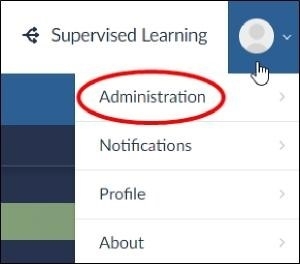

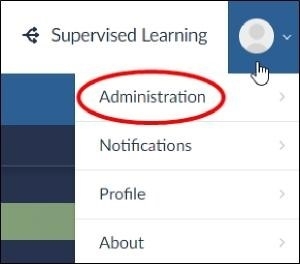

In the user drop-down menu, click Administration:

The Datasets screen will open.

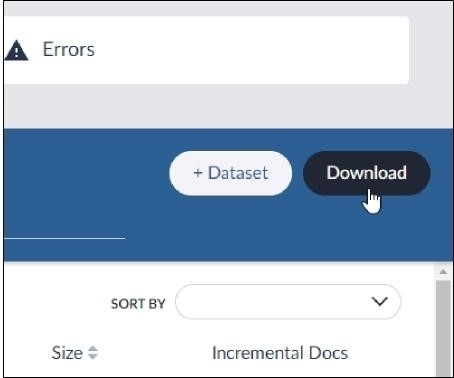

In the Datasets screen, click the Download button:

The Download Dataset Report modal will open, offering two options:

As of Now (the default) downloads the information shown in the screen for the datasets in their current state.

Monthly prompts the user to specify the month range for which data should be output.

Once Download is selected in the modal, the dataset management report *.csv file will download to your computer.

The last field in the report is Peak Usage, which gives the maximum data burst for each dataset. The report may be viewed as a text file or opened with a spreadsheet application.

Note

Datasets from before version 6.7 do not have peak usage recorded per month. In addition, some time periods may return N/A or blank in the peak bytes column, which means that no peak usage data are available for the selected monthly range.
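When the downloaded report is processed by a script rather than a spreadsheet, the N/A and blank cases described above need explicit handling. The following is a minimal Python sketch, not an official Brainspace tool. It assumes that the first column identifies the dataset and that Peak Usage, the last column, holds an integer byte count; the header names in the sample are hypothetical.

```python
import csv
import io


def read_peak_usage(csv_text):
    """Map each row's first field to its Peak Usage value, or None when unavailable."""
    reader = csv.reader(io.StringIO(csv_text))
    next(reader)  # skip the header row
    result = {}
    for row in reader:
        if not row:
            continue  # ignore empty lines
        raw = row[-1].strip()  # Peak Usage is the last field in the report
        # Blank or N/A means no peak usage data exist for the selected range.
        result[row[0]] = None if raw in ("", "N/A") else int(raw)
    return result


# Hypothetical sample; a real export contains more columns.
sample = "Dataset,Peak Usage\nAlpha,1048576\nBeta,N/A\nGamma,\n"
peaks = read_peak_usage(sample)
print(peaks)
```

Returning `None` instead of zero keeps "no data recorded" distinct from a genuine peak of zero bytes.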

Download a Brainspace Monthly Usage Report

The Brainspace monthly Usage report includes the MAC address, license information, and usage statistics for each Brainspace server.

Note

Usage reports do not contain client data.

To download a monthly Usage report:

In the user drop-down menu, click Administration:

The Datasets screen will open.

Click the Services button:

Click the Usage Report button:

The Available Usage Reports dialog will open.

Choose a usage month, and then click the Download button.

A *.csv file will download to your local machine.

Note

If additional usage capacity is required, please email the *.csv file to reports@revealdata.com and contact the Reveal Customer Success Management (CSM) team at support@revealdata.com.

Reference Topics

Monthly Usage Report Fields

The following table describes the fields in the Usage report:

| Field | Description |
|---|---|
| Server Name | The local server name, not necessarily the well-known name. |
| Server Type | Classic server type (e.g., Runtime, Build, Analytics). |
| Mac Address | Unique Ethernet interface identifier used in Brainspace licensing. |
| Customer ID | English language identifier for the customer/site as it appears in the Brainspace license file. |
| License ID | Software-generated value as it appears in the Brainspace license file. |
| Available Cores | Number of available CPUs detected by Brainspace. |
| Date | Last day of the month for the report, or the date that the report was run when downloaded during the current month. |
| PEAK_DATASET_DOCS | The peak count of active documents during the month. Only valid for the Runtime server. Other servers will show -1 or will be blank. |
| END_DATASET_DOCS | Number of active documents at the end of the month, or currently active documents if downloaded during the current month. Only valid for the Runtime server. Other servers will show -1 or will be blank. |
| TOTAL_CONNECTORS | Number of connectors configured. Only valid for the Runtime server. Other servers will show -1 or will be blank. |
| PEAK_BUILD_DOCS | The peak count of documents analyzed during the course of the month. Only valid for the Analytics (Build) server. Other servers will show -1 or will be blank. |
| END_BUILD_DOCS | Number of documents analyzed at the end of the month, or currently active documents if downloaded during the current month. Only valid for the Analytics (Build) server. Other servers will show -1 or will be blank. |
| PEAK_BUILD_BYTES | The peak count of bytes of data analyzed during the course of the month. Only valid for the Analytics (Build) server. Other servers will show -1 or will be blank. |
| END_BUILD_BYTES | Number of bytes analyzed at the end of the month, or the current number of bytes analyzed if downloaded during the current month. Only valid for the Analytics (Build) server. Other servers will show -1 or will be blank. |
| PEAK_CONFIGURED_USERS | The peak count of configured users during the course of the month. Only valid for the Runtime server. Other servers will show -1 or will be blank. |
| END_CONFIGURED_USERS | Number of users configured at the end of the month, or number of users currently configured if downloaded during the current month. Only valid for the Runtime server. Other servers will show -1 or will be blank. |
| PEAK_ACTIVE_USERS | Number of simultaneously active users at the end of the month, or number of simultaneously active users if downloaded during the current month. Only valid for the Runtime server. Other servers will show -1 or will be blank. |
| TOTAL_LIFETIME_DOCS | Total number of documents actually analyzed. Only valid for the Analytics (Build) server. Other servers will show -1 or will be blank. |
| PEAK_INACTIVE_DOCS | The peak count of inactive documents during the course of the month. Only valid for the Runtime server. Other servers will show -1 or will be blank. |
| END_INACTIVE_DOCS | Number of inactive documents at the end of the month, or currently inactive documents if downloaded during the current month. Only valid for the Runtime server. Other servers will show -1 or will be blank. |

Note

On single-host environments or environments where the Analytics (Build) and On-Demand Analytics servers are the same, unexpected server counts may appear.

Note

On deployments where datasets were loaded from disk, documents will not count against PEAK_BUILD_DOCS, END_BUILD_DOCS, or TOTAL_LIFETIME_DOCS until they are submitted for analysis (build) on that environment. If a document is submitted for analysis to update, add, or delete metadata fields, it will be counted multiple times against TOTAL_LIFETIME_DOCS but only once against all other _DOCS metrics and in the current Brainspace UI’s Active document control.
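Because a metric that does not apply to a server type is reported as -1 or left blank, a script that totals these columns should first convert both markers to a "not applicable" value. The sketch below shows one way to do that in Python. It assumes the CSV headers match the field names in the table above; the function name and sample rows are hypothetical.

```python
import csv
import io

# Metric columns from the field table; a real export is assumed to use these headers.
METRIC_FIELDS = {
    "PEAK_DATASET_DOCS", "END_DATASET_DOCS", "TOTAL_CONNECTORS",
    "PEAK_BUILD_DOCS", "END_BUILD_DOCS", "PEAK_BUILD_BYTES", "END_BUILD_BYTES",
    "PEAK_CONFIGURED_USERS", "END_CONFIGURED_USERS", "PEAK_ACTIVE_USERS",
    "TOTAL_LIFETIME_DOCS", "PEAK_INACTIVE_DOCS", "END_INACTIVE_DOCS",
}


def normalize_usage_row(row):
    """Convert metric fields to int, mapping -1 and blank to None."""
    cleaned = {}
    for name, value in row.items():
        text = (value or "").strip()
        if name in METRIC_FIELDS:
            # -1 or blank means the metric is not valid for this server type.
            cleaned[name] = None if text in ("", "-1") else int(text)
        else:
            cleaned[name] = text  # descriptive fields stay as text
    return cleaned


# Hypothetical sample with only a few of the columns.
sample = (
    "Server Name,Server Type,PEAK_DATASET_DOCS,PEAK_BUILD_DOCS\n"
    "app01,Runtime,120000,-1\n"
    "build01,Analytics,,450000\n"
)
usage_rows = [normalize_usage_row(r) for r in csv.DictReader(io.StringIO(sample))]
for usage_row in usage_rows:
    print(usage_row)
```

Leaving -1 in place would silently reduce any sum across servers, which is why the sketch replaces it before any arithmetic.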

Dataset Reports

Aliases Report

Provides a list of all the email address aliases within the dataset. (This report is generally used by Brainspace and isn’t particularly useful for users. Brainspace recommends using the Person report for alias listings.)

Archive Report

Detailed report of the most recent import or transfer of data.

Batch Tools Version Report

Contains detailed information regarding which Batch Tools version was used to create the dataset, including hostname, MAC address, and PID information, as well as history for each incremental build or full build.

Boilerplate Report

Provides a list and occurrence count of all the unique boilerplate text identified during ingestion.

Build Error Log

Provides a detailed log of all the build errors encountered during ingestion.

Build Log

Provides a complete detailed log of all the ingestion steps during the build process.

Clusters Content

Lists all of the document IDs (for example, Control Numbers) for the ingested documents and maps them to a leaf cluster ID.

Clusters File

Contains the following cluster tree information: Cluster ID, Parent Cluster ID, Count of Documents in Cluster, Intra-cluster Metric, Cluster Type, and Folder Name.

Deleted Documents Report

Log of document IDs for documents deleted from a connected Relativity® workspace, if the Delete toggle is enabled for the Relativity® Plus connector.

Document Counts

Provides summary document count statistics for the dataset including how many documents were fed into Brainspace for ingestion, how many were ingested, how many were skipped, number of originals, exact duplicates, near duplicates, etc.

Extended Full Report

Includes all of the overlay fields and values from the Full Report and additional language detection fields BRS Primary Language and BRS Languages.

Full Report

Includes all of the overlay fields and values that can be overlaid into a third-party system such as Relativity®, either manually through the Relativity® Desktop Client or automatically by enabling Overlay in the Configuration screen on the Dataset Settings tab.

Import Error Archive

Compressed file that contains one or more of the files that failed to import.

Ingest Error Details

Text report containing more details about the errors in the Ingest Errors report.

Ingest Errors

*.csv report containing errors that occurred during ingestion with the location of the documents that caused the error.

Person Report

Lists all of the “Persons” automatically or manually created (via People Manager) along with the email addresses (aliases) associated with each person.

Process Report

Summary of the most recent dataset analysis.

Schema XML

The field mapping done via the interface is stored in this file and used to ingest all of the mapped metadata and text.

Status Report

Summary of the most recent dataset analysis.

Vocabulary File

List of all the unique terms and phrases identified within the set of data during ingestion.

For more details, see Brainspace Dataset Reports.

CMML Prediction Report

The Prediction Report contains one row for each document and provides the following details about the scoring of CMML classifier documents by training round.

Each row in the Prediction Report contains the following fields:

| Field | Description |
|---|---|
| key | The unique internal ID of the document. |
| responsive | This is “yes” if the document has been assigned the positive tag for the classification, “no” if assigned the negative tag, and blank if the document is untagged or given a tag that is neither positive nor negative. |
| predicted_responsive | This is “yes” if chance_responsive is 0.50 or more and “no” otherwise. A cutoff of 0.50 used with the predictive model creates a classifier that attempts to minimize error rate. Note that 0.50 is typically not the cutoff you would use for other purposes. |
| matches | This is “yes” if responsive is non-null and the same as predicted_responsive. This is “no” if responsive is non-null and different from predicted_responsive. Blank if responsive is null (e.g., the document is not a training document). |
| score | This is the raw score the predictive model produces for the document. It can be an arbitrary positive or negative number. The main use of score is for making finer-grained distinctions among documents whose chance_responsive values are 0.0 or 1.0. |
| chance_responsive | For predictive models that have been extensively trained, particularly by many rounds of active learning, chance_responsive is an approximation of the probability that a document is a positive example. Values are between 0.0 and 1.0, inclusive. This value is produced by rescaling the score value. |
| Uncertainty | The uncertainty value is a measure of how uncertain the predictive model is about the correct label for the document. It ranges from 0.0 (most uncertain) to 1.0 (least uncertain). The uncertainty value can be used to identify documents that might be good training documents. Uncertainty is one factor used by the Fast Active and Diverse Active training batch selection methods. This value is produced by rescaling the chance_responsive value. |
| term1, score1, ..., term8, score8 | The terms that make the largest magnitude contribution (positive or negative) to the score of the document, along with that contribution. |
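The relationship between responsive, chance_responsive, predicted_responsive, and matches can be expressed in a few lines. The Python sketch below restates the rules from the table; it illustrates the described logic and is not Brainspace source code. The function names are hypothetical.

```python
def predict_label(chance_responsive, cutoff=0.50):
    """Return "yes" when chance_responsive is at or above the cutoff, else "no"."""
    return "yes" if chance_responsive >= cutoff else "no"


def matches_label(responsive, predicted):
    """Compare the manual tag with the prediction; blank when the document is untagged."""
    if not responsive:
        return ""  # not a training document, so there is nothing to compare
    return "yes" if responsive == predicted else "no"


# Hypothetical documents: (manual tag, chance_responsive)
examples = [("yes", 0.91), ("no", 0.62), ("", 0.50)]
for responsive, chance in examples:
    predicted = predict_label(chance)
    print(responsive or "(blank)", chance, predicted, matches_label(responsive, predicted) or "(blank)")
```

Passing a different `cutoff` shows how the predicted labels shift when 0.50 is not the appropriate threshold for a given purpose.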

CMML Consistency Report

The Consistency Report includes rows from the Prediction Report that correspond to training examples for a CMML classifier. It is useful for understanding how the predictive model acts on the documents used to train it. There are two main purposes for the Consistency Report:

To understand which training examples contributed particular terms to the predictive model.

To find training examples that might have been mistagged (search the “matches” field for “no”).
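The search for possibly mistagged examples can also be scripted. The following Python sketch assumes the Consistency Report is available as CSV text with the key, matches, and chance_responsive columns described for the Prediction Report. Ordering the results by distance from 0.50 is an added convenience of this sketch, not a Brainspace feature, and the function name is hypothetical.

```python
import csv
import io


def possibly_mistagged(csv_text):
    """Return keys of training documents whose tag disagrees with the prediction."""
    suspects = [
        row for row in csv.DictReader(io.StringIO(csv_text))
        if row["matches"].strip().lower() == "no"
    ]
    # Review first the documents where the model disagrees most strongly.
    suspects.sort(key=lambda row: abs(float(row["chance_responsive"]) - 0.5), reverse=True)
    return [row["key"] for row in suspects]


# Hypothetical sample rows.
sample = (
    "key,responsive,predicted_responsive,matches,chance_responsive\n"
    "D1,yes,yes,yes,0.91\n"
    "D2,no,yes,no,0.62\n"
    "D3,yes,no,no,0.05\n"
)
suspect_keys = possibly_mistagged(sample)
print(suspect_keys)
```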

CMML Round Report

The Round Report for training round K contains one row for each training round from 1 to K. The row for a training round summarizes information about the training set used on that round.

Note

Tags can have more than two values, but only one tag value is associated with the positive category for a classification, and one value is associated with the negative category for a classification. Documents with other tag values are not included in the training set.

Note

Documents can be untagged and retagged using a different tag choice. For that reason, it is possible that some documents that were in a training set on an earlier round are no longer in the training set in a subsequent training round.

Each row in the CMML Round Report contains the following fields:

| Field | Description |
|---|---|
| Training Rounds | The training round number in the series of classifier training rounds. |
| Number of Docs | The number of new documents added to the training set for the training round. It includes new documents from both the training batch, if any, and the ad hoc tagged documents, if any. This count does not include documents that were in the training batch on the previous round. The count includes documents with changed labels. The count is also not reduced if some document tags were removed from the previous round’s training batch or converted to non-classification tag values on the current round. For that reason, the Number of Docs count is never negative. |
| Net Manual Docs | This is the net number of new documents contributed by ad hoc tagging to the training set on the current round. It takes into account documents that have new positive or negative tag values. It also takes into account documents that had a positive or negative tag value at the end of the previous training round but no longer have that value. For that reason, the Net Manual Docs value can be positive, negative, or zero. |
| Classification Model | This column specifies the method that was used to create the training batch, if a batch creation method was used on a training round. If only ad hoc documents were tagged in a training round, the value REVIEW is used. |
| Cumulative Coded | The total number of documents in the training set at the end of a training round. |
| Cumulative Positive | The total number of documents with positive tag values at the end of a training round. |
| Cumulative Negative | The total number of documents with a negative tag value at the end of a training round. |
| Round Positive | The net change in the number of positive training documents since the previous training round. |
| Round Negative | The net change in the number of negative training documents since the previous training round. |
| Stability | This is an experimental measure of how much recent training has changed the predictive model. While presented for informative purposes, Brainspace does not recommend its use for making process decisions. |
| Consistency | This is the proportion of training set examples where the manually assigned tag disagrees with the prediction of a minimum error rate classifier (see Prediction Report). |
| Unreviewed Docs | Number of documents that are not tagged either positive or negative. Populated only for validation rounds. |
| Estimated Positive (lower bound) | Lower bound on a 95 percent binomial confidence interval on the proportion of positive documents among the documents not yet tagged as either positive or negative. Populated only for validation rounds. |
| Estimated Negative (lower bound) | Upper bound on a 95 percent binomial confidence interval on the proportion of positive documents among the documents not yet tagged as either positive or negative. Populated only for validation rounds. |
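To give a sense of what a 95 percent binomial confidence interval on a proportion looks like, the Python sketch below computes one from a sample count. The report description does not state which interval method Brainspace uses, so the Wilson score interval here is an assumption chosen for illustration, and its output should not be expected to match the report values exactly. The function name and sample numbers are hypothetical.

```python
import math


def wilson_interval(positives, sample_size, z=1.96):
    """Wilson score interval for a binomial proportion (z=1.96 gives about 95 percent)."""
    if sample_size <= 0:
        raise ValueError("sample_size must be positive")
    p = positives / sample_size  # observed proportion of positive documents
    denom = 1 + z * z / sample_size
    center = (p + z * z / (2 * sample_size)) / denom
    half_width = (
        z * math.sqrt(p * (1 - p) / sample_size + z * z / (4 * sample_size ** 2)) / denom
    )
    return max(0.0, center - half_width), min(1.0, center + half_width)


# Hypothetical validation sample: 12 positive documents out of 200 reviewed.
lower, upper = wilson_interval(positives=12, sample_size=200)
print(f"lower bound: {lower:.4f}, upper bound: {upper:.4f}")
```

Multiplying either bound by the Unreviewed Docs count gives a rough range for the number of positive documents that remain untagged, under the same assumption.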