Introducing aji: your expertise, magnified. Quickly launch and intelligently fine-tune your reviews with full context and traceable logic.

aji is Reveal’s GenAI Review engine, tailored to attorneys and built for precision, giving you speed and control over your reviews. aji doesn’t just process documents at staggering scale; it helps legal teams deliver outcomes they can trust.

Designed with transparency and flexibility in mind, aji provides several ways to create an informative and personalized AI-assisted review experience for all kinds of users.

aji offers real-time feedback to refine and strengthen your Definition (a written explanation of the content relevant to your review) so that it delivers the documents you’re looking for.

aji tests your Definition against a small sample of your documents, so you can refine your Definition before running aji Reviews at scale.

aji includes in-document reasoning for each GenAI rating and in-text citations for positively rated documents, opening the AI black box.

aji provides a Hybrid GenAI Review mode that significantly reduces the cost of using GenAI for document review.

With all of the above capabilities, review can become a much quicker step in the eDiscovery workflow and offer valuable insight into your datasets, all at a more affordable cost than manual review.

Before you use aji, we recommend reading:

aji Environment and Data Requirements: Outlines certain requirements that need to be met before you can use aji.

aji Billing: Describes how Reveal keeps track of your GenAI usage through aji Units.

aji Unit Allocation – Instance Admin: Details how to assign aji Units to projects, which is necessary before users can perform Calibration Reviews, GenAI Reviews, or Hybrid GenAI Reviews.

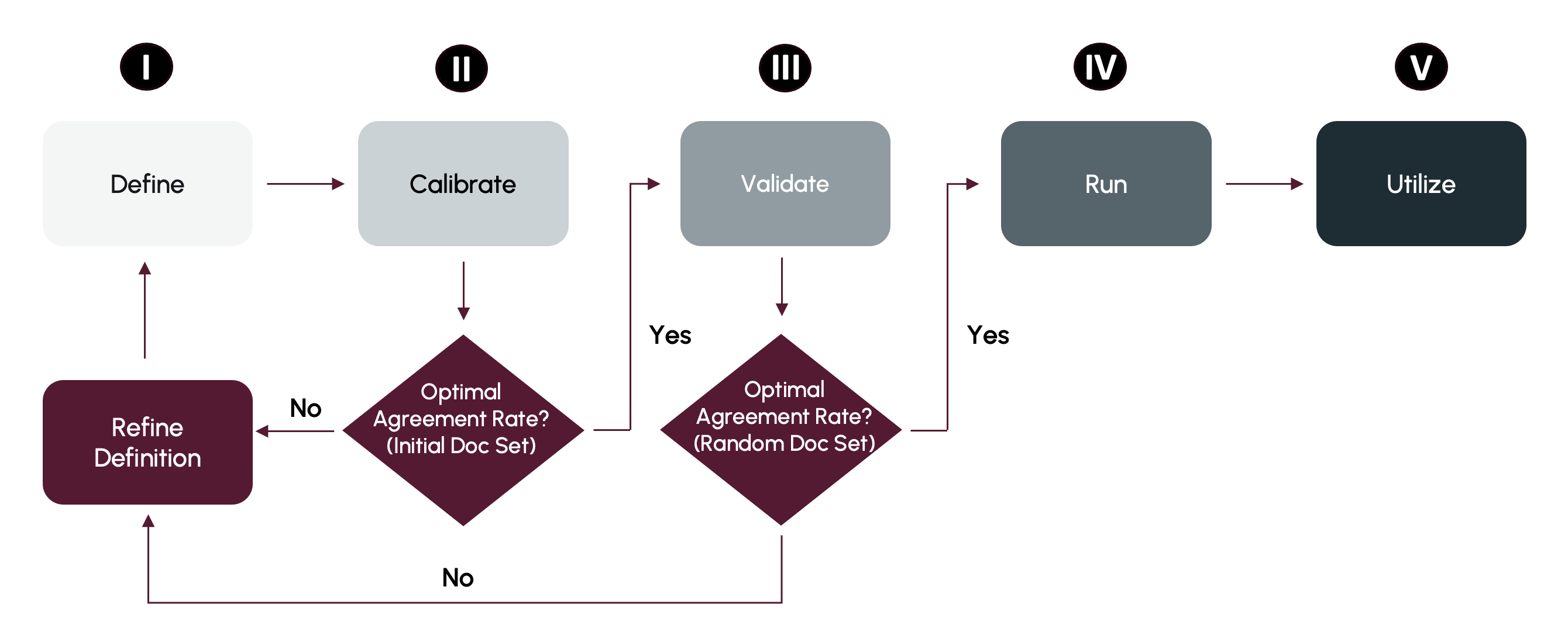

Five Stages of the aji Review Process

A complete use of aji’s features follows the sequence of stages below.

See the corresponding reference articles for each stage for in-depth instructions, terminology, and methodology.

I. Define

Create a Definition (a written prompt based on your RFP or subpoena) to describe what you’re looking for in your dataset, and list important case Context that will guide aji in its review. Our AI Advisor can help refine and strengthen your Definition even before aji uses it to rate your documents.

Please reference the following articles during your Define stage:

II. Calibrate

Perform a Calibration Review: manually review a small subset of documents according to your Definition, then compare your coding decisions with the GenAI (LLM) ratings for that same set. If the Agreement Rate is high (GenAI ratings match your coding decisions), move to Validate. If the Agreement Rate is low, refine your Definition and perform Calibration again.
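As a rough illustration of the comparison described above, the sketch below computes an agreement rate as the share of documents where the GenAI rating matches the reviewer's coding decision. This is an assumption made for illustration only: the function name, the document IDs, and the simple relevant/not-relevant ratings are hypothetical, and aji's actual Agreement Rate calculation may differ.

```python
def agreement_rate(human_codes, genai_ratings):
    """Share of commonly reviewed documents where both decisions match.

    Hypothetical helper: both arguments map a document ID to a
    relevant (True) / not relevant (False) decision.
    """
    shared = human_codes.keys() & genai_ratings.keys()
    if not shared:
        raise ValueError("no documents in common to compare")
    matches = sum(
        1 for doc_id in shared if human_codes[doc_id] == genai_ratings[doc_id]
    )
    return matches / len(shared)


# Made-up calibration sample of four documents.
human = {"DOC-1": True, "DOC-2": False, "DOC-3": True, "DOC-4": True}
genai = {"DOC-1": True, "DOC-2": False, "DOC-3": False, "DOC-4": True}

rate = agreement_rate(human, genai)
print(f"Agreement rate: {rate:.0%}")  # Agreement rate: 75%
```

In this made-up sample, the one disagreement (DOC-3) is the kind of document whose reasoning and citations you would inspect before refining the Definition.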

Documents reviewed by GenAI will contain descriptive reasonings and in-text citations that can be used to understand the GenAI’s thought process and further edit your Definition.

You can also select the Auto-Tuned Definitions option to generate a suggested Definition that takes the results of your Calibration Review into consideration.

Please reference the following article during your Calibrate stage:

III. Validate

With your Definition finalized, review a larger, random set of documents outside your Calibration document set. Here, you confirm for quality assurance that the GenAI ratings are consistently accurate against this random set. This stage is not required; you may skip to Run if you are satisfied with your Definition and its performance in the Calibrate stage.

The Validate stage follows a workflow similar to the one you use in the Calibrate stage. Please reference the following article during your Validate stage:

IV. Run

Use aji to review your full dataset. There are two Review Modes to choose from:

GenAI Review: All documents will be rated by GenAI (LLM), which will generate reasonings and in-text citations for the rated documents.

Hybrid GenAI Review: Combines GenAI with Reveal’s supervised learning Classifiers (SVM) for a faster and more affordable review.

Please reference the following articles during your Run stage:

V. Utilize

aji fully integrates with Reveal’s unified suite of eDiscovery tools, allowing you to combine GenAI Review with data visualizations, supervised learning, and more. You can leverage your documents reviewed with aji by:

Filtering, sorting, and prioritizing documents based on the GenAI ratings.

Viewing reasonings and in-text citations to understand why GenAI gave a document its rating.

Using aji’s results to guide you on next steps for your eDiscovery workflow.

Please reference the following article during your Utilize stage:

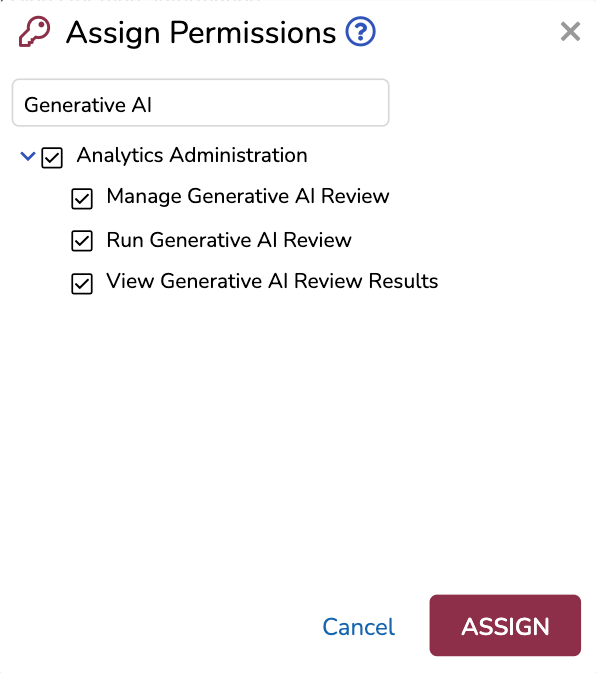

aji Permissions

By default, Instance, Company, and Project Admins have all of aji’s permissions turned on. Three permissions can be toggled on or off at the role level: open the Assign Permissions modal in the Permissions screen and search for Generative AI.

Manage Generative AI Review: Can create and edit aji Workflows (project overview, definitions, context), and can view the results of a Calibration, GenAI, or Hybrid GenAI Review.

Run Generative AI Review: Can start a Calibration, GenAI, or Hybrid GenAI Review.

View Generative AI Review Results: Can view aji Ratings, reasonings, and citations in Document Viewer and Document Preview.

Note

In order to run reviews, you must check both the Run Generative AI Review permission and the Manage Generative AI Review permission.
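The rule in this note can be sketched as a simple check. The permission names come from the list above; the function and the example role sets are hypothetical and only illustrate the logic, not how Reveal implements roles.

```python
MANAGE = "Manage Generative AI Review"
RUN = "Run Generative AI Review"
VIEW = "View Generative AI Review Results"


def can_run_review(role_permissions):
    """Return True only if the role has both Run and Manage enabled.

    Hypothetical helper: role_permissions is any collection of
    permission names that are turned on for a role.
    """
    return {MANAGE, RUN} <= set(role_permissions)


# Hypothetical roles for illustration.
print(can_run_review({RUN, MANAGE}))  # True
print(can_run_review({RUN, VIEW}))    # False: Manage is missing
```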

For further instructions on how to change permissions, see our Create Roles article.