Early Access

This article has been published prior to aji’s GA date. Content may be subject to updates before its official release.

Overview

aji’s Hybrid Review is Reveal’s unique GenAI review mode that prioritizes speed and affordability. Hybrid Review is faster and less expensive than Full Review: aji trains a Classifier against your dataset, and when the review reaches a defined condition (a round number or a precision / recall value), the Classifier scores the remainder of your dataset.

How Does Hybrid Review Work?

Hybrid Review is a process that’s defined by rounds. During each round:

aji will batch a small number of documents from your project. You can choose how many documents are batched each round.

aji will review each document in the batch using GenAI, giving reasoning and citations for each Definition in your workflow.

aji will use its decisions to train the Classifier.

The Classifier will score all documents in your project, giving each document a Predictive Score.

Hybrid Review continues to run rounds until it meets a stopping condition. The default stopping condition is the number of rounds completed, which you can customize to meet your review needs.

Every Hybrid Review uses the number of rounds completed as a stopping condition, but you can further customize the run by adding any of the following advanced stopping conditions:

Stop after reviewing all documents above a specific predictive score.

Stop after reaching a specific precision value.

Stop after reaching a specific recall value.

Stop after reaching both a specific precision value and recall value.

If your advanced stopping condition isn’t met by the time Hybrid Review completes your set number of rounds, the review still stops. Think of an advanced stopping condition as a way to end Hybrid Review before it reaches your total number of rounds.
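The interaction between the rounds-completed condition and an advanced condition can be sketched as a bounded loop. This is a minimal illustration, not aji’s implementation: the function name, the `advanced_stop` callback, and the assumption that every round sends exactly `docs_per_round` documents to GenAI are all hypothetical.

```python
from typing import Callable, Optional, Tuple


def run_hybrid_review(
    max_rounds: int,
    docs_per_round: int,
    advanced_stop: Optional[Callable[[int], bool]] = None,
) -> Tuple[int, int]:
    """Return (rounds completed, documents sent to GenAI).

    `advanced_stop` is a hypothetical callback standing in for any advanced
    stopping condition; it is checked after each round finishes.
    """
    rounds_done = 0
    for round_number in range(1, max_rounds + 1):
        # One round: batch documents, review them with GenAI,
        # retrain the Classifier, then rescore every document (omitted here).
        rounds_done = round_number
        if advanced_stop is not None and advanced_stop(round_number):
            break  # advanced condition met: end before max_rounds
    # If no break occurred, the run stopped at max_rounds, the condition every run has.
    # Assumption: every round sends exactly docs_per_round documents to GenAI.
    return rounds_done, rounds_done * docs_per_round
```

For example, with 10 rounds of 50 documents, an advanced condition that is first met after round 4 returns `(4, 200)`, while a condition that is never met returns `(10, 500)`.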

Note

To use a precision or recall stopping condition, you must first create a Control Set and then code all the documents in it.

Hybrid Review Steps

Hybrid Review generally follows these steps:

Create Your Control Set – Create a Control Set from the Review Grid.

Review Your Control Set – Code all the documents in your Control Set.

Start Hybrid Review – Perform Hybrid Review and choose your stopping conditions.

Interpret Hybrid Review Results – View the results of your Hybrid Review and determine next steps.

I. Create Your Control Set

Creating a Control Set allows you to select precision or recall values as stopping conditions. You can skip to step III, Start Hybrid Review, if you don’t want precision or recall stopping conditions.

Create your Control Set by following the instructions in our Create a Control Set article. Make sure you use the right Classifier and Tag Profile associated with your aji Workflow.

To find the name of your Classifier:

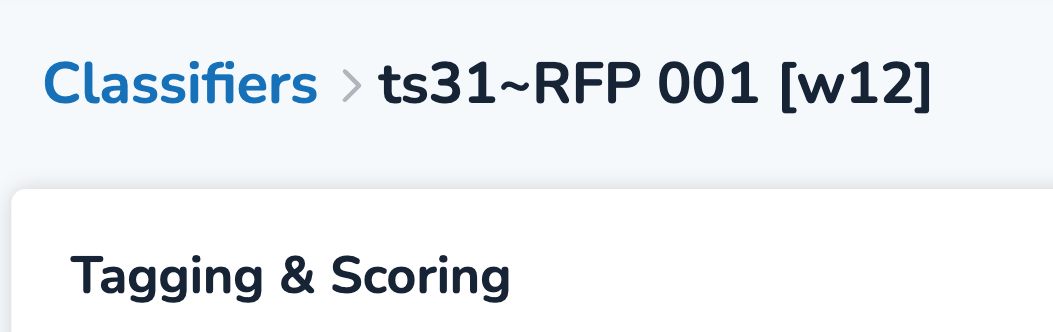

Click the Classifier ID number in the Run Information pane (“62” in the example). This opens the Tagging & Scoring tab in Supervised Learning.


In the breadcrumb trail, the name of your Classifier as it appears in the dropdown is everything after the tilde ( ~ ). In the example, the name is “RFP 001 [w12]”.

Choose the Tag Profile that contains your AI Tag.
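The breadcrumb rule for finding the Classifier name amounts to simple string handling. The Python sketch below takes everything after the first tilde and trims surrounding spaces. The function name and the sample breadcrumb text are hypothetical, since the exact breadcrumb format isn’t reproduced here, and treating the spaces around the tilde as separators is an assumption.

```python
def classifier_name_from_breadcrumb(breadcrumb: str) -> str:
    """Return everything after the tilde (~) in a breadcrumb string."""
    _, tilde, name = breadcrumb.partition("~")
    if not tilde:
        raise ValueError("no '~' found in breadcrumb")
    # Assumption: spaces around the tilde are separators, not part of the name.
    return name.strip()


# Hypothetical breadcrumb text; the real trail may be formatted differently.
print(classifier_name_from_breadcrumb("Supervised Learning / 62 ~ RFP 001 [w12]"))
# prints: RFP 001 [w12]
```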

II. Review Your Control Set

Find your Control Set under the Assignments folder.

Your folder will have the naming convention [Classifier]_Control Set[BatchID], for example “HR-Payroll_Control Set00001”.

Code all documents in the folder using the correct tag. The tag should match the name of your Classifier, which you found when creating your Control Set.

For the Classifier to work with your Control Set, the set needs at least 4 positive documents and 1 negative document.
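The Control Set folder naming convention and the readiness requirements (every document coded, at least 4 positive and 1 negative) can be expressed as two small checks. This is a hedged sketch: both function names are hypothetical, and the five-digit zero padding of the batch ID is inferred from the “HR-Payroll_Control Set00001” example rather than documented.

```python
from typing import Optional, Sequence


def control_set_folder_name(classifier: str, batch_id: int) -> str:
    # Assumption: the batch ID is zero-padded to five digits, as in the example.
    return f"{classifier}_Control Set{batch_id:05d}"


def control_set_is_ready(codes: Sequence[Optional[bool]]) -> bool:
    """codes: True = positive, False = negative, None = not yet coded."""
    if any(code is None for code in codes):
        return False  # every Control Set document must be coded
    positives = sum(1 for code in codes if code)
    negatives = len(codes) - positives
    return positives >= 4 and negatives >= 1


print(control_set_folder_name("HR-Payroll", 1))  # HR-Payroll_Control Set00001
print(control_set_is_ready([True] * 4 + [False]))  # True
print(control_set_is_ready([True] * 5))  # False: no negative document
```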

III. Start Hybrid Review

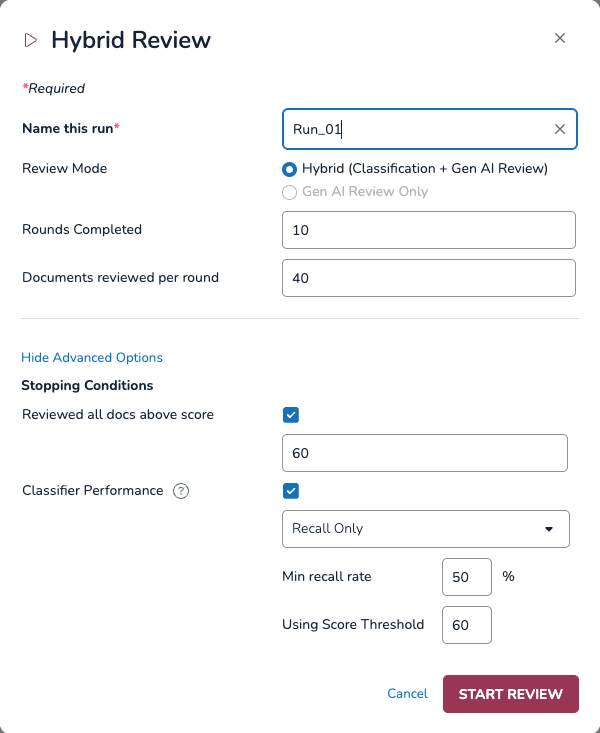

From the Hybrid Run module, click Add. The Hybrid Review modal will appear.

Name this run: Name your Hybrid Review.

Use a naming convention that makes sense to you and makes it easy to tell your runs apart.

Review Mode: This selection will be locked on Hybrid (Classifier + GenAI Review).

Rounds Completed: Choose a number of rounds to complete before the review stops.

Documents reviewed per round: Choose a number of documents aji will review each round.

Advanced Options: Click Show Advanced Options to reveal advanced stopping conditions, which stop the review early, even if it hasn’t completed the set number of rounds.

Reviewed All Docs Above Score: Choose a predictive score value. The review will stop if it reviews all documents above your set score.

Classifier Performance:

Recall Only – Stop after reaching a specific recall value.

Min Recall Rate: Choose your recall rate.

Using Score Threshold: Choose your score threshold.

Precision Only – Stop after reaching a specific precision value.

Min Precision Rate: Choose your precision rate.

Using Score Threshold: Choose your score threshold.

Both – Stop after reaching a specific precision value and recall value. Both values must be met for the run to stop.

Min Recall Rate: Choose your recall rate.

Min Precision Rate: Choose your precision rate.

Using Score Threshold: Choose your score threshold.
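The two kinds of advanced stopping condition above can be sketched as simple checks. This is an illustration under stated assumptions, not aji’s logic: the function names and the `"recall"` / `"precision"` / `"both"` mode strings are hypothetical, “above your set score” is read as strictly greater than it, and the recall and precision values are assumed to be already measured on the Control Set at your chosen score threshold.

```python
from typing import Dict, List, Literal, Optional, Set

Mode = Literal["recall", "precision", "both"]


def reviewed_all_above(scores: Dict[str, int], reviewed: Set[str], min_score: int) -> bool:
    """True once every document scoring above min_score has been GenAI-reviewed."""
    # Assumption: "above" means strictly greater than the chosen score.
    return all(doc in reviewed for doc, score in scores.items() if score > min_score)


def performance_met(
    mode: Mode,
    recall: float,
    precision: float,
    min_recall: Optional[float] = None,
    min_precision: Optional[float] = None,
) -> bool:
    """Check a Classifier Performance condition against its minimum rate(s)."""
    checks: List[bool] = []
    if mode in ("recall", "both"):
        if min_recall is None:
            raise ValueError("min_recall is required for this mode")
        checks.append(recall >= min_recall)
    if mode in ("precision", "both"):
        if min_precision is None:
            raise ValueError("min_precision is required for this mode")
        checks.append(precision >= min_precision)
    if not checks:
        raise ValueError(f"unknown mode: {mode!r}")
    return all(checks)  # "both" requires every selected minimum to be met
```

For instance, `performance_met("both", 0.85, 0.55, min_recall=0.80, min_precision=0.60)` returns `False`: recall clears its minimum, but precision does not, so the run keeps going.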

Note

Additional information about Classifiers and score thresholds can be found in our Supervised Learning Overview article.

IV. Interpret Hybrid Review Results

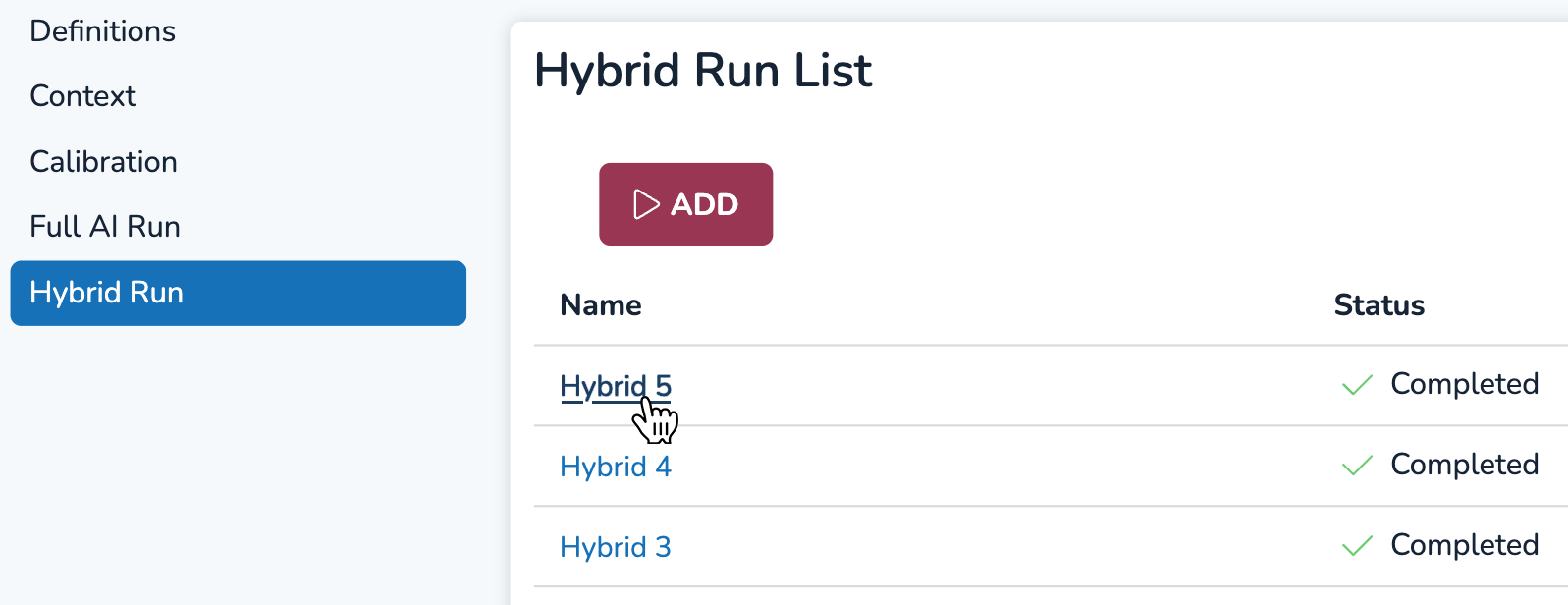

After your Hybrid Review completes, you can view your results by selecting its name from the Hybrid Run List table.

Hybrid Review Data Visualizations

There are three main data visualization tabs for Hybrid Review.

Hybrid Run Results tab

The Hybrid Run Results tab functions similarly to the Tagging & Scoring and Control Set tabs available after running a Classifier in Supervised Learning. The graph shows the distribution of predictive scores assigned by the Classifier; each score represents the likelihood (0–100) that a document is relevant to your Definition. For a more detailed explanation of the data in this tab, see our Evaluate an AI Model article.

Tagging & Scoring information is presented in the graph. The total numbers of positive, negative, and not tagged documents appear in the information panel to the top right of the graph. Below them, you can see how many documents are in your Control Set and the total number of documents in the run.

The Control Set information, at the bottom right, provides precision, recall, F1, and richness values.
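Of these values, richness is the only one that does not depend on the Classifier. Assuming the usual definition (the share of Control Set documents coded positive), it can be computed as in this minimal sketch; the function name is hypothetical.

```python
from typing import Sequence


def richness(codes: Sequence[bool]) -> float:
    """Share of Control Set documents coded positive (relevant)."""
    if not codes:
        raise ValueError("the Control Set is empty")
    return sum(codes) / len(codes)


# e.g. 20 positive documents in a 500-document Control Set
print(richness([True] * 20 + [False] * 480))  # 0.04
```

A low richness means few positive examples in the Control Set, which is worth keeping in mind given the minimum of 4 positive documents the Classifier needs.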


Just underneath the graph, the blue slider lets you move between round numbers to see how the score distribution changed from round to round.

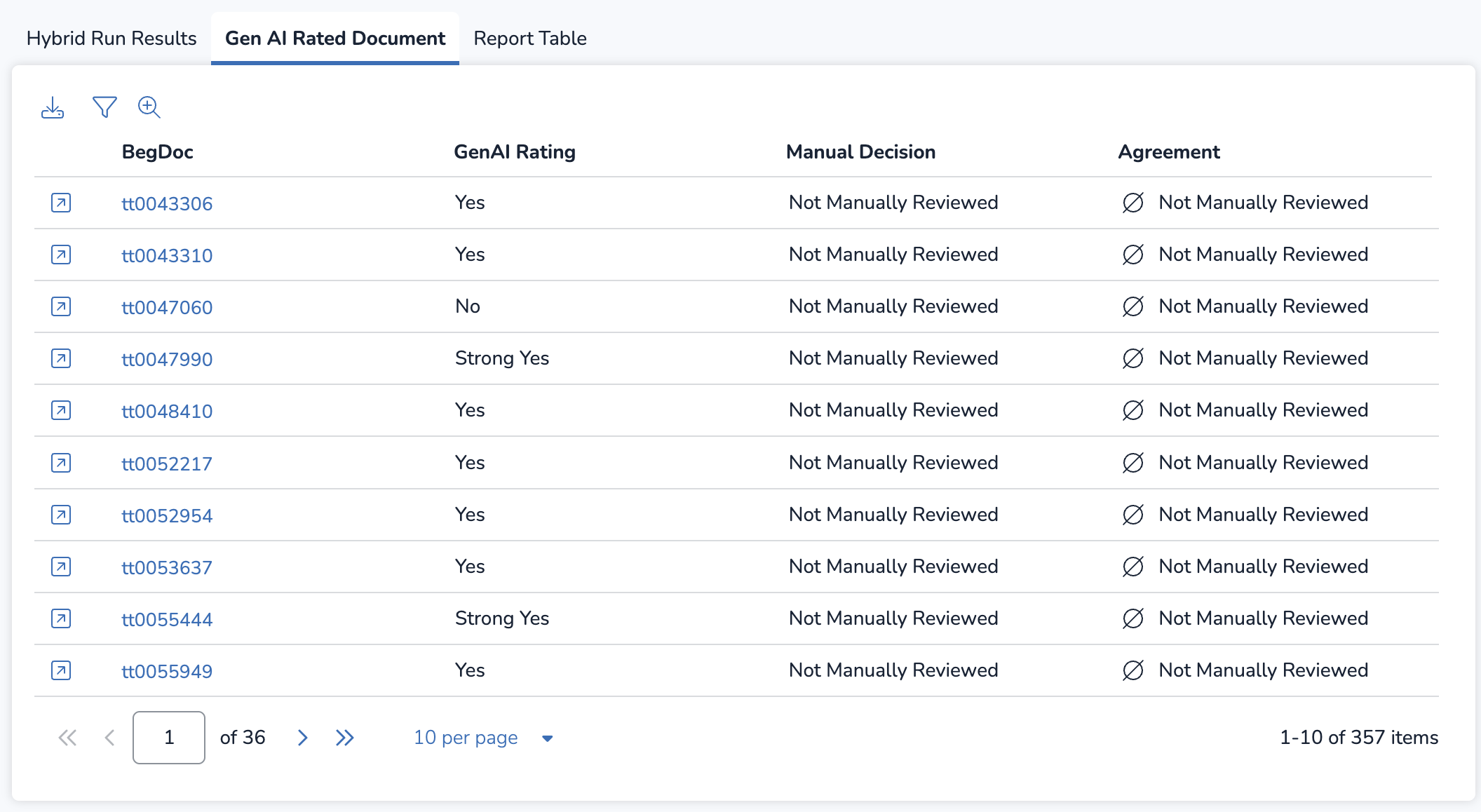

GenAI Rated Document tab

The GenAI Rated Document tab functions the same as the Agreement Report by Document tab in Calibration and Full Review. You can see aji’s ratings and open documents to read the reasoning and citations. See Calibrate aji for more information on this tab.

Note

The GenAI Rated Document table shows only the documents sent to GenAI for review in each round. You set how many documents are sent per round in the Documents reviewed per round field of the Hybrid Review modal.

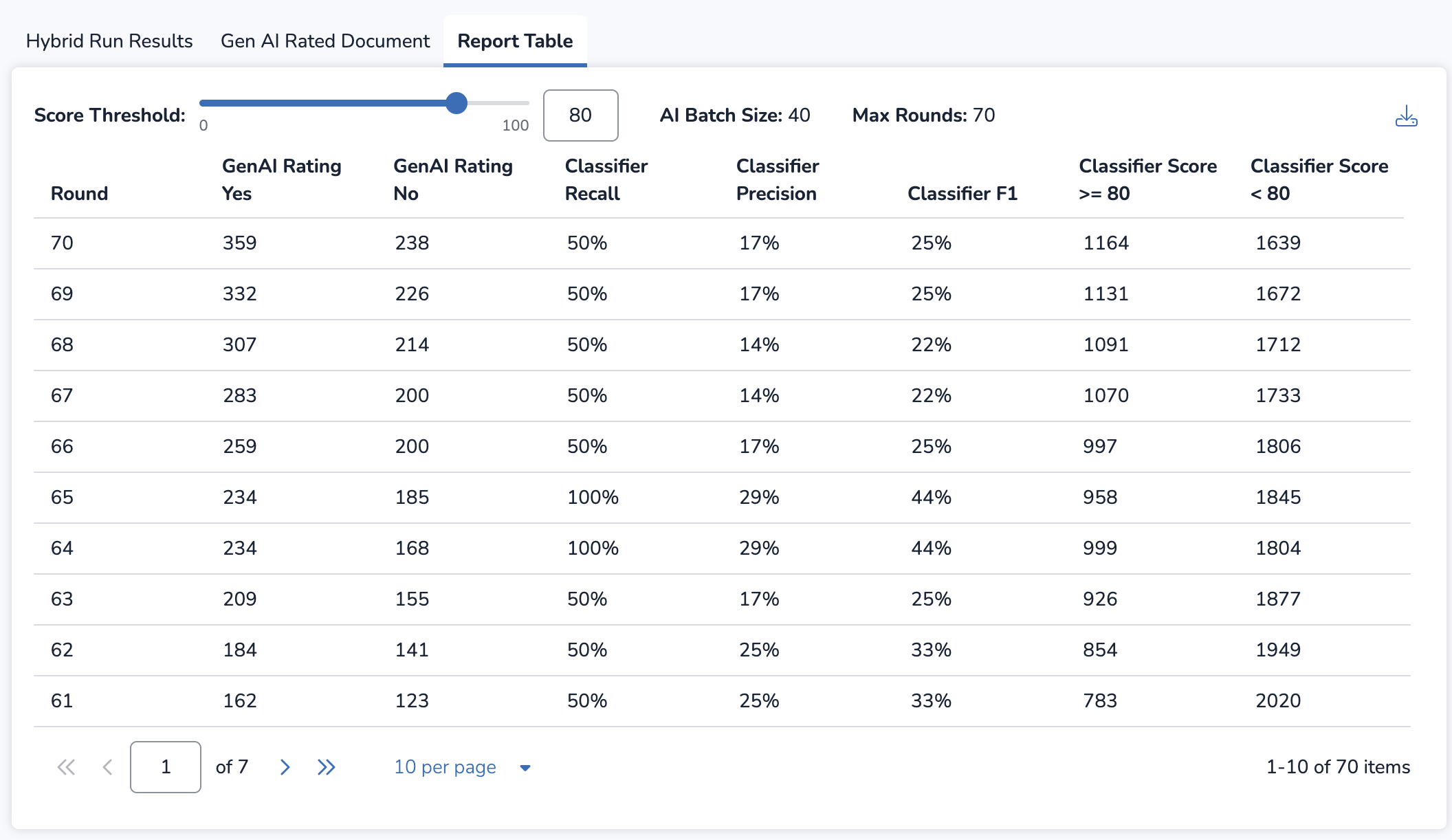

Report Table tab

The Report Table tab provides round-by-round metrics across your entire Hybrid Review. Above the table, you can find the AI Batch Size (the value you entered in the Documents reviewed per round field of the Hybrid Review modal) and the Max Rounds you set for the run as a stopping condition.

The table itself lists two sets of data: data related to the GenAI Ratings and data related to the Classifier’s predictive scores (Classifier Score, Recall, Precision, and F1).

Data related to your Classifier depends on a score threshold. The score threshold is the cutoff that separates predictive scores considered relevant (Yes) from those considered not relevant (No). For example, with a score threshold of 80, all documents with a predictive score of 80 or above are considered relevant, and documents with a predictive score of 79 or lower are considered not relevant.
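The effect of the score threshold on precision, recall, and F1 can be illustrated with the standard definitions applied to Control Set documents. This is a sketch, not Reveal’s calculation: the function name and sample data are hypothetical, and reporting a metric as 0.0 when its denominator is empty is an assumption.

```python
from typing import Sequence, Tuple


def metrics_at_threshold(
    scores: Sequence[int], relevant: Sequence[bool], threshold: int
) -> Tuple[float, float, float]:
    """Precision, recall, and F1 for Control Set documents at a score threshold."""
    predicted = [score >= threshold for score in scores]  # score >= threshold counts as Yes
    tp = sum(p and r for p, r in zip(predicted, relevant))
    fp = sum(p and not r for p, r in zip(predicted, relevant))
    fn = sum(r and not p for p, r in zip(predicted, relevant))
    # Assumption: a metric with an empty denominator is reported as 0.0.
    precision = tp / (tp + fp) if tp + fp else 0.0
    recall = tp / (tp + fn) if tp + fn else 0.0
    f1 = 2 * precision * recall / (precision + recall) if precision + recall else 0.0
    return precision, recall, f1


scores = [95, 85, 80, 79, 60, 30]
relevant = [True, True, False, True, False, False]
print(metrics_at_threshold(scores, relevant, 80))
```

At a threshold of 80, two of the three documents counted as Yes are relevant and one relevant document (scored 79) is missed, so precision, recall, and F1 are all 2/3. Lowering the threshold to 79 counts that document as Yes, giving precision 0.75 and recall 1.0.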

You can change your score threshold using the blue slider, or by entering a whole number value in the text box. Since score threshold influences precision, recall, and F1 calculations, changing the slider dynamically updates all the Classifier values in the table.

In the table, each column represents the following data:

Round: The round number.

GenAI Rating, Yes: Number of documents rated as “Strong Yes” or “Yes”.

GenAI Rating, No: Number of documents rated as “Borderline” or “No”.

Classifier Recall: The recall value of that round, considering your score threshold.

Classifier Precision: The precision value of that round, considering your score threshold.

Classifier F1: The F1 value of that round, considering your score threshold.

Classifier Score ≥ X: The number of documents with a predictive score equal to or above your score threshold (X).

Classifier Score < X: The number of documents with a predictive score below your score threshold (X).
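The GenAI and score-count columns of a Report Table row can be sketched as follows. This is an illustration only: the function and constant names are hypothetical, the GenAI counts are assumed to cover the documents in that round’s batch, and the Recall, Precision, and F1 columns are left out because they also need the Control Set codes.

```python
from typing import Dict, Sequence

# Rating groups as described for the GenAI Rating columns.
YES_RATINGS = {"Strong Yes", "Yes"}
NO_RATINGS = {"Borderline", "No"}


def report_row(
    round_number: int,
    genai_ratings: Sequence[str],
    scores: Sequence[int],
    threshold: int,
) -> Dict[str, int]:
    unknown = set(genai_ratings) - YES_RATINGS - NO_RATINGS
    if unknown:
        raise ValueError(f"unexpected ratings: {sorted(unknown)}")
    at_or_above = sum(score >= threshold for score in scores)
    return {
        "Round": round_number,
        "GenAI Rating, Yes": sum(r in YES_RATINGS for r in genai_ratings),
        "GenAI Rating, No": sum(r in NO_RATINGS for r in genai_ratings),
        f"Classifier Score ≥ {threshold}": at_or_above,
        f"Classifier Score < {threshold}": len(scores) - at_or_above,
    }


print(report_row(1, ["Strong Yes", "Yes", "Borderline", "No", "Yes"], [90, 80, 79, 10], 80))
```

Only the two Classifier Score columns change when the threshold moves; the GenAI Rating columns stay fixed, consistent with the slider updating only the Classifier values.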

You can download the full Report Table as a spreadsheet file by clicking the download icon in the upper right-hand corner of the panel. The download contains only the data for the score threshold currently selected with the slider.
